What are the elements that make a huge difference in your productivity when you are developing an application? Are there features that you lament not having or that you spend time re-implementing again and again as you go to the next application, the next programming language?

Today I want to talk about what makes a big difference for me when developing applications that are sufficiently large, both in terms of components and data model.

I hope you'll share your thoughts on this topic as well!

Data representation

Properly modeling the domain of an application is crucial. Think about it: the data types that we define are going to be used almost everywhere in our application!

What can go wrong?

Here are some of the problems that arise when we fail to model our data correctly:

Consistency

An Order and an InventoryItem can reference the same Product, yet each carries its own product_id field, and nothing prevents the two from being set to disagreeing values.

Meaning

Imagine a field with an ambiguous meaning: expiry: Long. Is it a Date or a Duration? What is the unit: minutes, seconds?

Even if the field is well-typed: expiry: Date, what does this mean? Can I still create an Order for a Product with this expiry date? What if that field is also used to include the product or not in marketing campaigns? Now that field might have one value but 2 meanings.

Precision

How many times do we see String fields where restrictions should apply? For example, newlines are rarely allowed in a name. Accepting them at one end of the system might completely break the UI at the other end of the system, or another system for that matter.

The same goes for the use of Int in Scala for example:

case class Person(name: String, age: Int)

Really, can a Person have -20 as its age? One satisfying thing with Rust is that it knows the price of everything, so there are all sorts of unsigned integers. At least we have a way to represent (and calculate with) natural numbers:

struct Person {

name: String,

// min value: 0, max value 255

age: u8,

}
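
The same precision can be had for strings with a newtype whose constructor checks its input. This is only a minimal sketch: `Name`, `NameError` and `parse` are names made up for the illustration, and the rule (no control characters, not empty) is an assumption about what a "valid name" means.

```rust
/// A name that is guaranteed to be non-empty and free of control characters
/// such as newlines. `Name` and `NameError` are hypothetical, for illustration.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct Name(String);

#[derive(Debug, PartialEq, Eq)]
pub enum NameError {
    Empty,
    ControlCharacter(char),
}

impl Name {
    /// The only way to build a `Name`: invalid input is rejected at the boundary.
    pub fn parse(input: &str) -> Result<Name, NameError> {
        // leading and trailing whitespace is not considered part of the name
        let trimmed = input.trim();
        if trimmed.is_empty() {
            return Err(NameError::Empty);
        }
        if let Some(c) = trimmed.chars().find(|c| c.is_control()) {
            return Err(NameError::ControlCharacter(c));
        }
        Ok(Name(trimmed.to_string()))
    }

    pub fn as_str(&self) -> &str {
        &self.0
    }
}
```

Since the field is private, every `Name` in the system has gone through `parse`, so the UI at the other end never has to wonder about embedded newlines.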

Coupling

It is very easy to model data that encompasses too many concerns:

case class Customer(

name: Name,

age: Age,

address: Address,

occupation: Occupation,

currentBalance: Balance,

account: Account,

acquiredVia: MarketingCampaign,

openLitigations: List[Litigation],

)

You get the idea... When data mixes too many concerns it implicitly couples all the components that deal with these concerns. This is the recipe for a lot of code churn.

🧰 What do I want?

Data definition

To be precise with data modeling I expect a few things from my programming language:

Structs and enums (+ pattern matching on enums).

Non-verbose data declarations (Scala, Haskell, and Rust do this well), so I don't fear creating a Name data type if I need to.

Good libraries for common quantities: dates, times, money, memory size.

Data input and parsing

I give bonus points to languages giving me the ability to define well-typed literals at compile-time:

val address = url"http://google.com"

A more elaborate version for defining and parsing correct data is supported by the refined library in Scala:

scala> type ZeroToOne = Not[Less[0.0]] And Not[Greater[1.0]]

defined type alias ZeroToOne

scala> refineMV[ZeroToOne](1.8)

> error: Right predicate of (!(1.8 < 0.0) && !(1.8 > 1.0)) failed
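
Rust has no direct equivalent of refined in its standard library, but a `const fn` constructor evaluated in a `const` item gives a similar compile-time check for literals. The sketch below assumes a hypothetical `Percent` type restricted to 0..=100; it is an illustration of the technique, not a replacement for a refinement-type library.

```rust
/// A percentage between 0 and 100 (hypothetical type, for illustration).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Percent(u8);

impl Percent {
    /// Usable in `const` position: an out-of-range literal then fails the build.
    pub const fn new(value: u8) -> Percent {
        if value > 100 {
            panic!("a percentage must be between 0 and 100");
        }
        Percent(value)
    }

    pub const fn value(self) -> u8 {
        self.0
    }
}

// Checked by the compiler because the call is evaluated in a `const` item.
pub const HALF: Percent = Percent::new(50);

// Uncommenting the next line is expected to produce a compile-time error:
// pub const TOO_MUCH: Percent = Percent::new(180);
```

Note that the same constructor called with a runtime value panics at runtime instead, so data coming from outside the program still needs a fallible parsing function.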

Standard type classes

It should be completely trivial to add derived behavior to a data type for:

Equality.

Optionally ordering or hashing.

Showing, debugging.

Again, Scala / Haskell / Rust, make this very easy.
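
In Rust, for instance, all of these come from a single `derive` attribute. The `ProductId` type and the `distinct_sorted` helper below are made up for the example; they only show which derived trait enables which operation.

```rust
use std::collections::HashSet;

/// Equality, ordering, hashing and debug output, all derived.
/// `ProductId` is a hypothetical type used only for illustration.
#[derive(Debug, Clone, PartialEq, Eq, PartialOrd, Ord, Hash)]
pub struct ProductId(pub u32);

/// Removes duplicates (needs `Eq` + `Hash`) and sorts the result (needs `Ord`).
pub fn distinct_sorted(ids: Vec<ProductId>) -> Vec<ProductId> {
    let unique: HashSet<ProductId> = ids.into_iter().collect();
    let mut sorted: Vec<ProductId> = unique.into_iter().collect();
    sorted.sort();
    sorted
}
```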

Data output

One word about using Show / Debug instances. Those instances are fine to display data by default. However, I found it extremely useful to define a specific trait, say Output, dedicated to a domain representation of values. For example, if a Warehouse has a list of loadedDocks which are generally loaded in order, it is nicer to represent:

Warehouse: East-London-1

loaded docks: 1..7

Rather than:

Warehouse: East-London-1

loaded docks: 1, 2, 3, 4, 5, 6, 7

The ability to display things concisely pays off in the long run when writing tests and debugging. This is very domain-dependent but some utility functions around text can be useful:

To pretty print lists.

To pretty print tables (with aligned columns, and/or wrapped text).

To create ranges.

To make a name plural based on a quantity:

n.times() == "3 times".
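
A possible shape for such a trait and two of these helpers, sketched in Rust. `Output`, `compact_ranges` and `pluralize` are hypothetical names, and the plural rule is deliberately naive (it just appends an "s").

```rust
/// A domain-oriented rendering, separate from `Debug`.
/// `Output`, `Warehouse` and the helpers are hypothetical names.
pub trait Output {
    fn output(&self) -> String;
}

pub struct Warehouse {
    pub name: String,
    pub loaded_docks: Vec<u32>,
}

/// Renders sorted numbers compactly: [1, 2, 3, 5] becomes "1..3, 5".
pub fn compact_ranges(numbers: &[u32]) -> String {
    let mut parts: Vec<String> = Vec::new();
    let mut i = 0;
    while i < numbers.len() {
        let start = numbers[i];
        let mut end = start;
        // extend the current run while the next number is consecutive
        while i + 1 < numbers.len() && Some(numbers[i + 1]) == end.checked_add(1) {
            end = numbers[i + 1];
            i += 1;
        }
        parts.push(if start == end {
            start.to_string()
        } else {
            format!("{}..{}", start, end)
        });
        i += 1;
    }
    parts.join(", ")
}

/// Naive English plural: appends "s" unless the quantity is 1.
pub fn pluralize(quantity: usize, noun: &str) -> String {
    if quantity == 1 {
        format!("{} {}", quantity, noun)
    } else {
        format!("{} {}s", quantity, noun)
    }
}

impl Output for Warehouse {
    fn output(&self) -> String {
        format!(
            "Warehouse: {}\nloaded docks: {}",
            self.name,
            compact_ranges(&self.loaded_docks)
        )
    }
}
```

Keeping `Output` separate from `Debug` means the derived `Debug` instance stays available for low-level troubleshooting, while tests and logs can use the concise domain form.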

Serialization

Serialization is the unfortunate consequence of the fact that systems are rarely just isolated islands, they need to communicate with other systems.

Let's start with a 🌶️ take:

Derived instances are an anti-pattern

Now that I've made enemies of 90% of the profession I need to explain my viewpoint 😀. A derived instance for serialization is some code that the compiler generates automatically for a data type to serialize its values. For example:

data Employee = Employee {

name :: Name,

age :: Age

} deriving (Eq, Show, Encode)

In the example above we simply annotate the Employee data type with Encode, and we get the ability to encode values:

encode (Employee (Name "eric") (Age 100)) ==

"{ 'name': 'eric', 'age': 100 }"

I will keep the argument short but the main problem with this facility is that we couple our domain model too much with the serialization concern:

We end up defining some attributes only so that it helps the serialization.

Serializing to different formats might place incompatible constraints on some attributes.

We cannot easily support several serialized versions representing the evolution of a protocol over time.

We hand over the serialization to a library that uses meta-programming to decide how to serialize data and might not do it most efficiently.

In the end, serialization becomes a burden and slows down the evolution of an application.

🧰 What do I want?

Rather than having to reconcile domain needs with serialization needs all the time, I simply prefer to have the ability to write encoders and decoders manually.

Defining one encoder

That sounds horrible, but it is not that bad with a good programming language and some library support:

makePersonEncoder :: Encoder Name -> Encoder Age -> Encoder Person

makePersonEncoder name age = \(Person n a) ->

array [encrypt (encode name n), encode age a]

I can decide exactly how I want to encode the 2 fields here, as an array or a map, but I can also decide that I want to encrypt the name, with no impact on my domain model.

And if my data type is not fancy, I should be able to access some macros to do the simple, default, case:

makePersonEncoder = makeEncoder ''Person
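
The same idea carries over to other languages. Here is a minimal Rust sketch under strong assumptions: `Encoder` is a hypothetical type wrapping a plain function, no real serialization library is involved, and strings are quoted without any escaping.

```rust
/// An encoder is just a function from a value to its serialized form.
/// Everything here is a hypothetical sketch: no real serialization library is used.
pub struct Encoder<T>(Box<dyn Fn(&T) -> String>);

impl<T: 'static> Encoder<T> {
    pub fn new(f: impl Fn(&T) -> String + 'static) -> Encoder<T> {
        Encoder(Box::new(f))
    }

    pub fn encode(&self, value: &T) -> String {
        (self.0)(value)
    }
}

pub struct Name(pub String);
pub struct Age(pub u8);
pub struct Person {
    pub name: Name,
    pub age: Age,
}

/// Naive: wraps the name in quotes without escaping anything.
pub fn name_encoder() -> Encoder<Name> {
    Encoder::new(|n: &Name| format!("\"{}\"", n.0))
}

pub fn age_encoder() -> Encoder<Age> {
    Encoder::new(|a: &Age| a.0.to_string())
}

/// The wire format (here a two-element array) is decided in this function only;
/// the `Person` type knows nothing about it.
pub fn make_person_encoder(name: Encoder<Name>, age: Encoder<Age>) -> Encoder<Person> {
    Encoder::new(move |p: &Person| {
        format!("[{}, {}]", name.encode(&p.name), age.encode(&p.age))
    })
}
```

Passing a different `Encoder<Name>` (one that encrypts or masks the name, say) changes the output without touching `Person`.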

Wiring everything together

The other advantage of derived instances is the ability to wire all the instances together for a whole data model. Doing it manually is not much fun:

orderEncoder :: Encoder Order

orderEncoder =

makeOrderEncoder

(makeCustomerEncoder (makeNameEncoder stringEncoder)

(makeAgeEncoder intEncoder))

(makeProductEncoder (makeProductIdEncoder stringEncoder)

(makeDescriptionEncoder stringEncoder))

There is however one great advantage! We can now modify this wiring to evolve the serialization independently from the domain model. For example, if there is a new attribute for a Customer, we should still be able to serialize an old customer for the new data model, to communicate with a system that hasn't upgraded yet:

-- old version, where the age of the customer was not needed (now it is)

orderEncoderV1 :: Encoder Order

orderEncoderV1 =

makeOrderEncoder

(makeCustomerEncoderV1 (makeNameEncoder stringEncoder))

(makeProductEncoder (makeProductIdEncoder stringEncoder)

(makeDescriptionEncoder stringEncoder))

Even that part can be reduced to a minimum via a library like registry-aeson in Haskell:

-- you just declare the change between the 2 versions

-- all the wiring is done automatically for each version

encodersV1 =

fun makeCustomerEncoderV1

<: encoders
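
To make the versioning argument concrete without any library, here is a small Rust sketch of two wirings for the same domain type. It mirrors the Haskell wiring above, where the V1 customer encoder only receives a name encoder. `Encoder`, `Customer` and the function names are hypothetical, and the output format is an arbitrary array notation.

```rust
/// Hypothetical minimal encoder type: a boxed function producing a string.
pub struct Encoder<T>(Box<dyn Fn(&T) -> String>);

impl<T: 'static> Encoder<T> {
    pub fn new(f: impl Fn(&T) -> String + 'static) -> Encoder<T> {
        Encoder(Box::new(f))
    }

    pub fn encode(&self, value: &T) -> String {
        (self.0)(value)
    }
}

pub struct Customer {
    pub name: String,
    pub age: u8,
}

pub fn string_encoder() -> Encoder<String> {
    Encoder::new(|s: &String| format!("\"{}\"", s))
}

pub fn int_encoder() -> Encoder<u8> {
    Encoder::new(|i: &u8| i.to_string())
}

/// Current protocol: name and age.
pub fn make_customer_encoder(name: Encoder<String>, age: Encoder<u8>) -> Encoder<Customer> {
    Encoder::new(move |c: &Customer| {
        format!("[{}, {}]", name.encode(&c.name), age.encode(&c.age))
    })
}

/// Protocol V1: only the name is sent, the age is left out.
pub fn make_customer_encoder_v1(name: Encoder<String>) -> Encoder<Customer> {
    Encoder::new(move |c: &Customer| format!("[{}]", name.encode(&c.name)))
}
```

Both encoders work on the same `Customer` value, so supporting a peer that has not upgraded yet is a matter of choosing a wiring, not of keeping an old copy of the domain model around.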

Persistence

Persistence presents mostly the same challenges as serialization in terms of data management with the added complexity of being able to represent and navigate relations:

As pointers in a domain model.

As table relations in a relational model.

My general inclination here is to use simple SQL libraries, not ORMs, or fancy typed-SQL libraries. This means that I have to write tests just to make sure that I don't have a typo in my SQL query but this is not a bad thing! Those tests are also important to check the semantics of my persistence layer:

What should be the unique keys?

What are all the entities that must be deleted when one entity is deleted?

Should there be a failure if I try to insert the same item with different values?

🧰 What do I want?

I mostly want a database library (in combination with a test library) that lets me easily:

Create queries, bind parameters, create database transactions

Manage schema migrations

Deserialize rows (see the section on Serialization)

Create an isolated database with the latest schema

Pinpoint syntax errors

Interact with actual test data

Data generation

Once we start having a large and somewhat complex data model it is important to be able to easily create data of any shape to test the application features. The problem is that:

This is tedious if there are many fields.

This leads to duplication of test code, when assembling large data structures from smaller ones.

The result is that we tend to write fewer tests than we should.

🧰 What do I want?

One excellent way to avoid these issues is to be able to create data generators for our data model:

personGenerator :: Gen Name -> Gen Age -> Gen Person

personGenerator name age = Person <$> name <*> age

In Haskell, if I have a generator for Name and a generator for Age, I can easily get a generator of random data for Person using the wonderful applicative notation.

Then I can go:

ghci> sample personGenerator

Person { name: Name "eric", age: Age 100 }

That's it. I have an example of a Person that I can reuse in many, many tests. If I take the habit of creating generators as I evolve my data model, I allow my coworkers to easily create test cases outside of the feature I was working on.

There are three difficulties with that approach:

Creating a generator for a Person seems like it could be automated.

Wiring generators together is not fun.

Changing just one generator for a given test is tedious.

Wait, that's the same problem as the serialization problem! And it has a similar solution 😊.
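
For readers who don't use Haskell, the composition of generators can be sketched in plain Rust. This is a toy under stated assumptions: `Rng` is a tiny linear congruential generator written for the example, `Gen` is a hypothetical wrapper around a function, and a real project would more likely rely on a property-testing library.

```rust
/// A tiny deterministic pseudo-random source (linear congruential generator).
/// Written for this example only; not suitable for anything serious.
pub struct Rng(u64);

impl Rng {
    pub fn new(seed: u64) -> Rng {
        Rng(seed)
    }

    pub fn next_u64(&mut self) -> u64 {
        self.0 = self.0
            .wrapping_mul(6364136223846793005)
            .wrapping_add(1442695040888963407);
        // keep the higher bits, which are better distributed
        self.0 >> 33
    }
}

/// A generator is a function from a random source to a value.
pub struct Gen<T>(Box<dyn Fn(&mut Rng) -> T>);

impl<T: 'static> Gen<T> {
    pub fn new(f: impl Fn(&mut Rng) -> T + 'static) -> Gen<T> {
        Gen(Box::new(f))
    }

    pub fn sample(&self, rng: &mut Rng) -> T {
        (self.0)(rng)
    }
}

#[derive(Debug, Clone, PartialEq)]
pub struct Person {
    pub name: String,
    pub age: u8,
}

pub fn name_gen() -> Gen<String> {
    let names = ["eric", "ada", "grace"];
    Gen::new(move |rng| names[(rng.next_u64() % names.len() as u64) as usize].to_string())
}

pub fn age_gen() -> Gen<u8> {
    Gen::new(|rng| (rng.next_u64() % 120) as u8)
}

/// Builds a `Person` generator from generators for its fields.
pub fn person_gen(name: Gen<String>, age: Gen<u8>) -> Gen<Person> {
    Gen::new(move |rng| Person {
        name: name.sample(rng),
        age: age.sample(rng),
    })
}
```

Because the wiring is explicit, a test that needs a centenarian can call `person_gen(name_gen(), Gen::new(|_| 100))` and leave every other generator untouched.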

Components

Once we nail our data model we still have to structure the functionalities of our application.

Great news! There's an excellent tool for this task, and it has been well-known for quite some time:

Separate the interface from the implementation, Luke

There are notions of interfaces in many programming languages but with all sorts of twists (I should do a distinct post just on that subject):

Does it use nominal typing or structural typing?

Does it have associated types?

Can you specify exception types separately?

Similarly, on the implementation side, there are many differences:

Are modules generative or applicative?

Is the interface declaration part of the implementation type (Java), or separated (Haskell, Rust, Scala)?

Are modules first-class entities (Scala, not Haskell)?

Can we have an unlimited amount of implementations for a given interface (not in Haskell when using type classes)?

🧰 What do I want?

The bare minimum is the possibility of having interfaces and a way to check or declare that an implementation conforms to an interface.

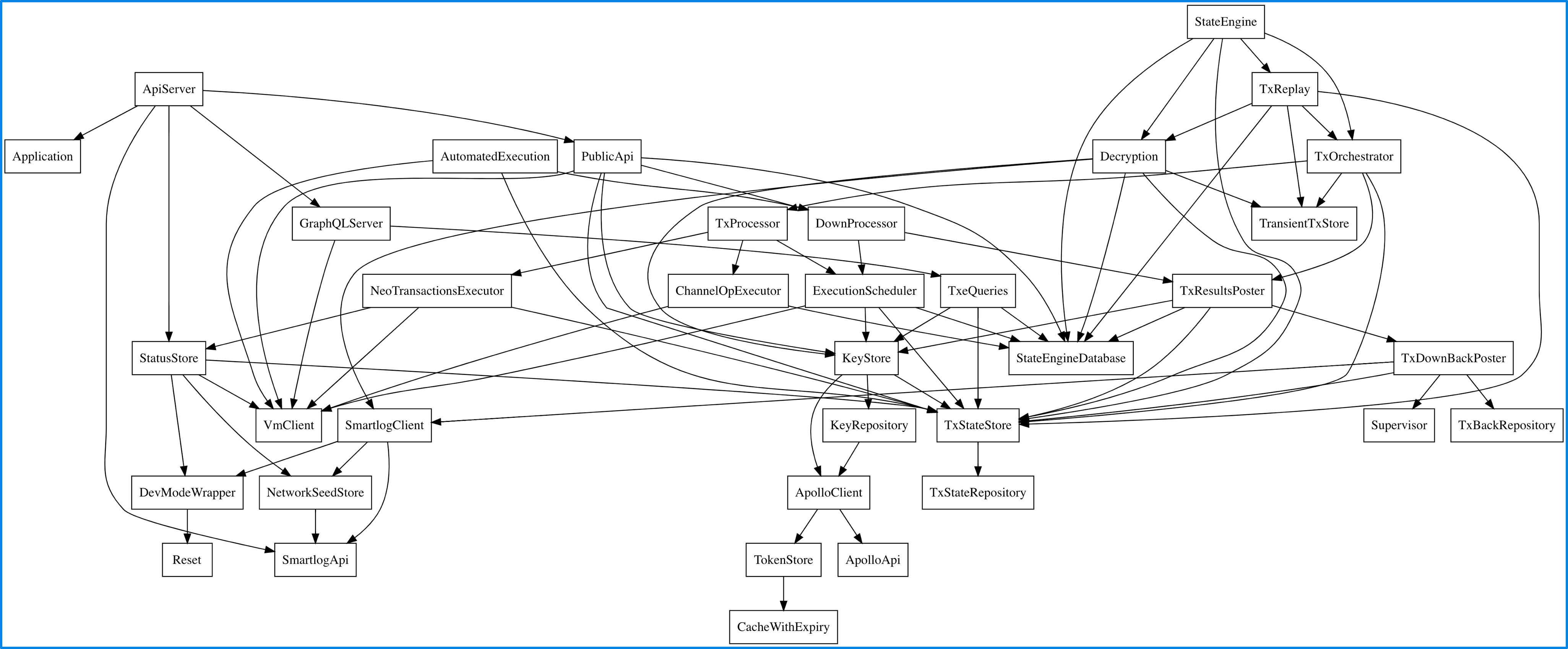

Above this, I want a way to do dependency injection. We can think about components in an application as a graph where each component, as an implementation, depends on other components, as interfaces:

For any reasonably complex and deep graph, I want to be able to easily switch implementations anywhere in the graph:

To change how logging is done.

To run entirely in memory.

To wrap a given component and make it return random errors to test the reliability of my system.

To run my system in different configurations: production, development, and demonstration.

To test a given sub-system in isolation from the rest (integration testing).

The consequences of not being able to easily do this include:

Losing time rewiring the system.

Not running some experiments because it is too tedious to do so.

Writing fewer integration tests and relying only on top-level system tests.

Having fewer possibilities to test complex scenarios.

Spending more time adjusting the "wiring code" when refactoring.
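
The bare minimum described above (an interface, several implementations, and a component that only knows the interface) can be sketched in Rust with a trait and constructor injection. `Logger`, `MemoryLogger` and `OrderService` are hypothetical names; this shows manual wiring, not a dependency-injection library.

```rust
use std::cell::RefCell;
use std::rc::Rc;

/// The interface: what the rest of the application depends on.
pub trait Logger {
    fn info(&self, message: &str);
}

/// Production-like implementation writing to standard output.
pub struct ConsoleLogger;

impl Logger for ConsoleLogger {
    fn info(&self, message: &str) {
        println!("[info] {}", message);
    }
}

/// In-memory implementation, convenient for tests.
#[derive(Default)]
pub struct MemoryLogger {
    pub messages: RefCell<Vec<String>>,
}

impl Logger for MemoryLogger {
    fn info(&self, message: &str) {
        self.messages.borrow_mut().push(message.to_string());
    }
}

/// A component that depends on the `Logger` interface, not on an implementation.
pub struct OrderService {
    logger: Rc<dyn Logger>,
}

impl OrderService {
    pub fn new(logger: Rc<dyn Logger>) -> OrderService {
        OrderService { logger }
    }

    pub fn place_order(&self, product: &str) -> bool {
        self.logger.info(&format!("order placed for {}", product));
        true
    }
}
```

Swapping `ConsoleLogger` for `MemoryLogger` is a one-line change at the place where the graph is assembled. The pain described in this section starts when the graph is deep and that assembly code has to be duplicated for every configuration.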

Resources

Back-end systems always end up dealing with limited resources:

Files, sockets, database connections, memory.

Time, when waiting on IO operations.

Time, when executing computation-intensive calculations.

There are good ways to deal with all these issues.

🧰 What do I want?

Safe resources closing

Closing files, database connections, etc... should be done as soon as possible and be robust in the face of exceptions or concurrency. This is not as easy as it seems.

I like the work that has been done in Scala for resource management, and I am still on the fence about the best way to deal with this in Rust. The pattern is called RAII ("Resource acquisition is initialization"), but I still wonder if we are abusing lifetimes and memory management to support resource management when:

The scopes can be different.

The need for IO might require some async functionalities (an async Drop is still being debated).
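
For reference, this is what the RAII pattern looks like in its simplest synchronous form. `Connection` is a stand-in for a real resource and records its lifecycle in a shared log (an assumption made purely so that the ordering can be observed).

```rust
use std::cell::RefCell;
use std::rc::Rc;

/// A stand-in for a real resource (file, socket, connection).
/// It records its lifecycle in a shared log so the order of events is visible.
pub struct Connection {
    name: String,
    log: Rc<RefCell<Vec<String>>>,
}

impl Connection {
    pub fn open(name: &str, log: Rc<RefCell<Vec<String>>>) -> Connection {
        log.borrow_mut().push(format!("open {}", name));
        Connection { name: name.to_string(), log }
    }
}

impl Drop for Connection {
    /// Runs when the value goes out of scope, whether the function returns
    /// normally or early with an error.
    fn drop(&mut self) {
        self.log.borrow_mut().push(format!("close {}", self.name));
    }
}

/// Opens two connections, fails, and still closes both.
pub fn run(log: Rc<RefCell<Vec<String>>>) -> Result<(), String> {
    let _first = Connection::open("first", log.clone());
    let _second = Connection::open("second", log.clone());
    // `_second` is dropped first, then `_first`: reverse declaration order
    Err("something went wrong".to_string())
}
```

The closing is tied to the lexical scope of the variable, which is exactly the limitation raised above: `drop` is synchronous and cannot return an error.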

Async/concurrency

There are different ways to support async programming:

As a library: Scala

As a library + a runtime: Haskell

As a language construct + a runtime: Rust

All those approaches have complexities and pitfalls. It seems to me that one way to keep our sanity when using concurrency is to use structured concurrency. This article, "Go statement considered harmful", is widely recognized as a great resource to learn about it, so go read about it if you haven't!

Scala would also be my favorite choice at this stage, with functional libraries like the Typelevel libraries or the ZIO ones.
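
As a small taste of structured concurrency outside of Scala, Rust's standard library offers scoped threads: `std::thread::scope` does not return until every thread spawned inside it has finished. The `parallel_sum` function below is a made-up example, and scoped threads are only one limited form of the idea (there is no cancellation, for instance).

```rust
use std::thread;

/// Sums each chunk on its own thread. `thread::scope` guarantees that every
/// spawned thread has finished before the function returns, so the threads can
/// borrow `numbers` without any reference counting.
pub fn parallel_sum(numbers: &[u64], chunk_size: usize) -> u64 {
    thread::scope(|scope| {
        let handles: Vec<_> = numbers
            .chunks(chunk_size.max(1)) // `chunks` panics on a size of 0
            .map(|chunk| scope.spawn(move || chunk.iter().sum::<u64>()))
            .collect();

        handles
            .into_iter()
            .map(|handle| handle.join().expect("a worker thread panicked"))
            .sum::<u64>()
    })
}
```

The lifetime of the concurrent work is bounded by a block of code, so no thread outlives the data it borrows and no result is silently lost.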

Streaming

Good streaming libraries allow us to iterate on infinite amounts of data without blowing up our memory:

In Haskell, I liked streaming for its simplicity and elegance.

In Scala, I would be tempted to use fs2 or zio-stream.
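
The core idea, pulling elements one at a time from a source that never ends, can be illustrated with Rust's standard iterators. They are not a full streaming library (no effects, no resource handling), and `squares` and `sum_of_squares_below` are names invented for the example.

```rust
/// A conceptually infinite stream of squares: 1, 4, 9, 16, ...
/// Nothing is computed until a consumer asks for the next element.
pub fn squares() -> impl Iterator<Item = u64> {
    (1u64..).map(|n| n * n)
}

/// Sums the squares below `limit`, pulling elements one at a time
/// and never materializing the whole sequence in memory.
pub fn sum_of_squares_below(limit: u64) -> u64 {
    squares().take_while(|square| *square < limit).sum()
}
```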

Traverse

traverse is such a useful function. If you start learning functional programming and then discover the traverse function, there is no turning back. It makes me a bit sad that traverse in Rust comes in different forms and with less-than-perfect type inference:

// for a vector

let results: Result<Vec<ValidValue>> =

values.iter().map(|v| check(v)).collect();

// for an option

let result: Result<Option<ValidValue>> =

optional_value.map(|v| check(v)).transpose();

In Haskell:

results = traverse check values -- or: for values check

result = traverse check optionalValue -- or: for optionalValue check

In Haskell, this is the same concept, whatever the container, whatever the effect!
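
For completeness, here is a self-contained version of the two Rust forms. `ValidValue`, `check` and its "strictly positive" rule are assumptions made for the example, and `String` is used as the error type to keep it short.

```rust
#[derive(Debug, PartialEq)]
pub struct ValidValue(pub i64);

/// Hypothetical validation: accepts only strictly positive numbers.
pub fn check(value: &i64) -> Result<ValidValue, String> {
    if *value > 0 {
        Ok(ValidValue(*value))
    } else {
        Err(format!("{} is not positive", value))
    }
}

/// "traverse" over a slice: `collect` stops at the first error.
pub fn check_all(values: &[i64]) -> Result<Vec<ValidValue>, String> {
    values.iter().map(check).collect()
}

/// "traverse" over an option: `None` is valid, `Some` must pass the check.
pub fn check_optional(value: Option<i64>) -> Result<Option<ValidValue>, String> {
    value.map(|v| check(&v)).transpose()
}
```

Both functions turn a container of results inside out, but one relies on `collect` and the other on `transpose`, which is the lack of uniformity lamented above.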

🧰 What do I want?

A good functional programming library gives us an incredible vocabulary to work with data and effects. map, traverse, foldMap, foldLeft, Monoid, Either, Option, etc. are invaluable for writing concise and correct code. This goes a bit against the grain of languages that encourage (controlled) mutation, like Rust, or that lack great support for functions and type inference, like Java.

What about you?

I gave you my laundry list of language features and libraries that I like having at my fingertips to productively develop robust applications. You probably have your own list of features that I did not cover and I'm genuinely curious to know about them. Please share!